Artificial Intelligence (AI) has become intricately woven into our daily lives, revolutionising various aspects of society. Nevertheless, alongside its transformative potential, AI also poses significant challenges, one of the most pressing being bias.

In recent years, the issue of bias in AI systems has garnered growing attention. The increased scrutiny highlights the urgent necessity to confront bias within AI technologies.

In this article, we will explore the various types of AI bias and their implications.

What is AI?

Artificial Intelligence (AI) is a set of technologies that enables computers to perform a variety of advanced functions. This includes the ability to see, understand, and translate spoken and written language, analyse data, make recommendations, and more.

What is AI Bias?

AI bias, also referred to as machine learning bias or algorithm bias, occurs when AI systems produce skewed results that reflect and perpetuate human biases within a society. Bias can be found in the initial training data, the algorithm, or the predictions the algorithm produces.

Bias can happen at different points in the AI process. One main reason for bias is how data is collected. AI systems are only as good as the data they are trained on, and when this data fails to reflect the real world, the results can be skewed and unfair.

5 Types of Bias in AI

Here are five types of bias that commonly occur in AI systems.

- Selection bias

This bias occurs when the training data is not representative of the population under study. This can happen for several reasons, like incomplete data sets or biased sampling methods.

For instance, consider AI systems trained to detect skin cancer. Suppose the data is solely gathered from individuals aged 20-50. In that case, the AI’s effectiveness for those aged 51 and above might be compromised due to selection bias stemming from inadequate data for this age group.
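One way to catch this kind of gap before training is to compare the make-up of the training sample with the population the system is meant to serve. The sketch below is a minimal illustration, not a standard method: the age bands, the `population_shares` figures, the sample ages, and the 10-point `tolerance` are all hypothetical values chosen to mirror the skin cancer example.

```python
from collections import Counter

BANDS = ("under 20", "20-50", "51+")  # illustrative band edges


def age_band(age):
    """Map an age in years to one of the coarse bands above."""
    if age < 20:
        return "under 20"
    if age <= 50:
        return "20-50"
    return "51+"


def band_shares(ages):
    """Return the share of records that fall in each age band."""
    counts = Counter(age_band(a) for a in ages)
    return {band: counts.get(band, 0) / len(ages) for band in BANDS}


def underrepresented(train_ages, population_shares, tolerance=0.10):
    """List bands whose training share trails the population share by more than `tolerance`."""
    train = band_shares(train_ages)
    return [
        band
        for band in BANDS
        if population_shares[band] - train[band] > tolerance
    ]


# Hypothetical training sample: everyone is aged 20-50.
train_ages = [22, 25, 29, 31, 35, 38, 40, 44, 47, 49]
# Hypothetical make-up of the population the tool will be used on.
population_shares = {"under 20": 0.05, "20-50": 0.55, "51+": 0.40}

print(underrepresented(train_ages, population_shares))  # ['51+']
```

A flagged band does not prove the model will perform badly for that group, but it is a cheap signal that more data, or a narrower claim about who the system works for, is needed.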

- Confirmation bias

Confirmation bias is the tendency to interpret new data as confirmation of existing beliefs. In AI, it occurs when a system leans too heavily on the beliefs or trends already present in the data it was trained on. This can strengthen existing biases and cause the system to miss fresh patterns or trends.

In a social media platform’s recommendation system, confirmation bias can occur when the algorithm suggests content based on a user’s past interactions.

For example, if someone frequently engages with conservative or liberal content, the algorithm may prioritise showing them similar posts. This can create an echo chamber where the user only sees content that confirms their beliefs, reinforcing their biases, and hindering exposure to diverse viewpoints.
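The feedback loop behind such an echo chamber can be illustrated with a toy simulation. This is a deliberately simplified sketch and not how any real platform works: the proportional `feed_shares` rule, the assumption that the user clicks only on their favourite topic, and the topic names are all hypothetical.

```python
def feed_shares(click_counts):
    """Share of the feed given to each topic, proportional to past clicks."""
    total = sum(click_counts.values())
    return {topic: count / total for topic, count in click_counts.items()}


def simulate(click_counts, rounds=5, items_per_round=10):
    """Track how the feed shifts when past clicks decide what is shown next.

    Assumed user behaviour: in each round the user clicks every item
    shown from their favourite topic and nothing else.
    """
    counts = dict(click_counts)
    history = [feed_shares(counts)]
    for _ in range(rounds):
        shares = feed_shares(counts)
        favourite = max(counts, key=counts.get)
        # Items shown from the favourite topic become new clicks on it.
        counts[favourite] += items_per_round * shares[favourite]
        history.append(feed_shares(counts))
    return history


# Hypothetical starting point: a mild 60/40 preference.
history = simulate({"topic_a": 6, "topic_b": 4})
for round_number, shares in enumerate(history):
    print(round_number, round(shares["topic_a"], 2))
```

Under these assumptions the favourite topic's share of the feed grows every round, even though the user's initial preference was only slight. Recommenders that reserve part of the feed for content outside the user's history are one way to interrupt the loop.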

- Stereotyping bias

Stereotyping bias occurs when an AI system reinforces harmful stereotypes.

For instance, AI image tools often perpetuate troubling stereotypes, portraying Asian women as hypersexual, Africans as primitive, leaders as men, and prisoners as Black.

- Measurement bias

Measurement bias occurs when the data is incomplete, often through oversight or lack of preparation, so that the dataset does not cover the entire population that should be considered.

For instance, if a college aimed to predict the factors contributing to successful graduation but only included data from graduates, the analysis would overlook the factors that influence dropout rates. The findings would therefore be only partially accurate, because they do not take into account the students who did not graduate.
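The college example can be made concrete with a few made-up records. In this sketch the `has_mentor` factor and every student record are hypothetical, invented purely to show what disappears when non-graduates are left out.

```python
def graduation_rate_by(students, factor):
    """Return {factor value: share of students with that value who graduated}."""
    totals, graduates = {}, {}
    for student in students:
        key = student[factor]
        totals[key] = totals.get(key, 0) + 1
        graduates[key] = graduates.get(key, 0) + int(student["graduated"])
    return {key: graduates[key] / totals[key] for key in totals}


# Hypothetical cohort: four students with a mentor, four without.
students = [
    {"has_mentor": True, "graduated": True},
    {"has_mentor": True, "graduated": True},
    {"has_mentor": True, "graduated": True},
    {"has_mentor": True, "graduated": False},
    {"has_mentor": False, "graduated": True},
    {"has_mentor": False, "graduated": False},
    {"has_mentor": False, "graduated": False},
    {"has_mentor": False, "graduated": False},
]

# Whole cohort: the factor clearly separates outcomes.
full_view = graduation_rate_by(students, "has_mentor")

# Graduates only: every group shows a 100% graduation rate.
graduates_only = [s for s in students if s["graduated"]]
partial_view = graduation_rate_by(graduates_only, "has_mentor")

print(full_view)     # {True: 0.75, False: 0.25}
print(partial_view)  # {True: 1.0, False: 1.0}
```

With the full cohort the factor is associated with a large difference in graduation rates. With graduates alone, the outcome never varies, so no analysis of that data can reveal what separates students who finish from those who drop out.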

- Out-group homogeneity bias

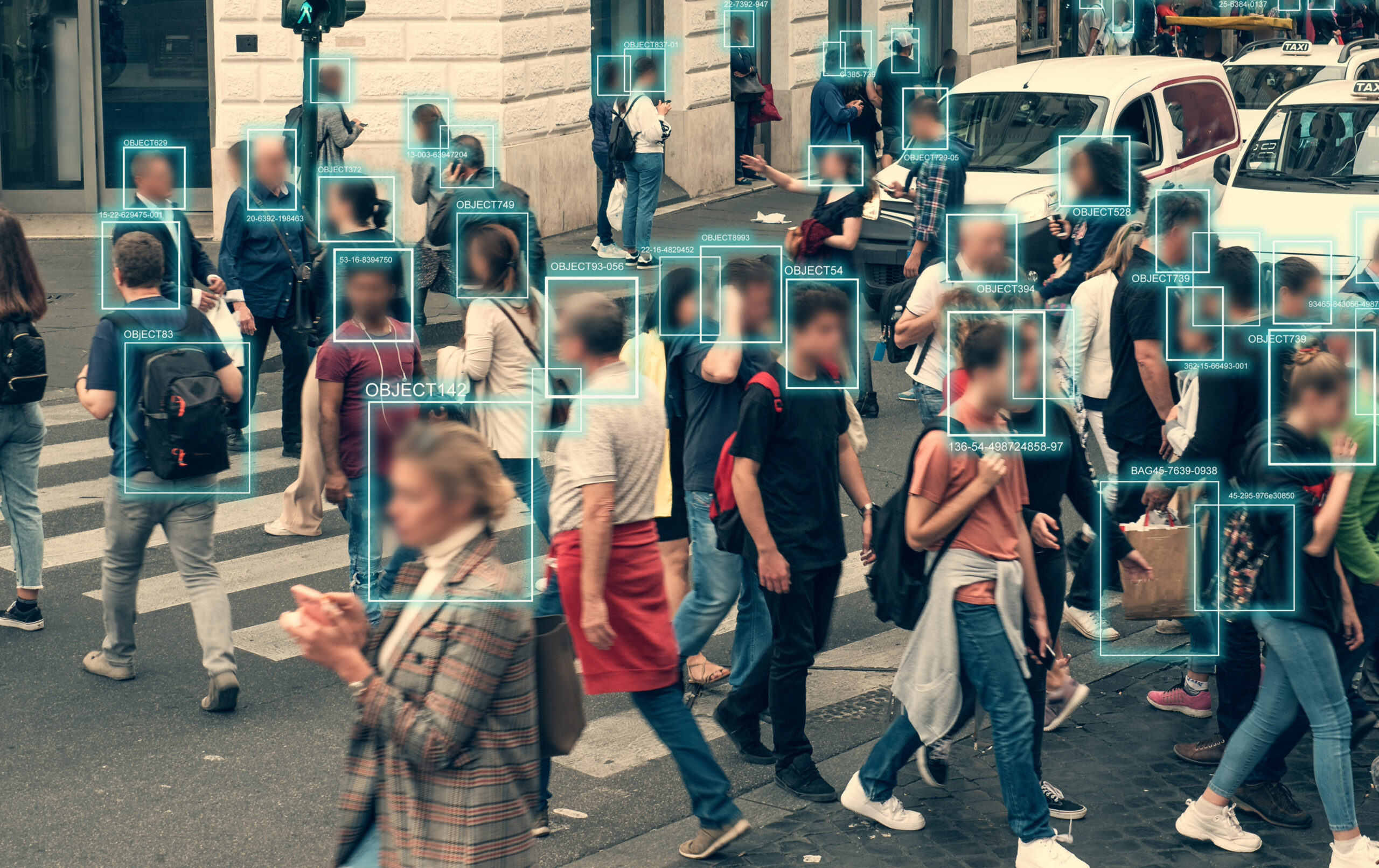

Out-group homogeneity bias occurs when an AI system struggles to differentiate individuals who are not represented well in the training data, leading to potential misclassification or inaccuracies, especially when dealing with minority groups.

An example of this bias is a facial recognition system trained primarily on images of individuals from one demographic group, such as Caucasians. Such a system may have difficulty accurately identifying individuals from other demographic groups, such as people of colour.
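A single overall accuracy figure can hide this problem, so a common check is to break accuracy down by group. The following is a minimal sketch under stated assumptions: the `(group, true_identity, predicted_identity)` record layout, the group names, and the evaluation results are all hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy separately for each group.

    `records` is an iterable of (group, true_identity, predicted_identity).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical evaluation results for a face-matching system.
records = [
    ("group_a", "id_1", "id_1"),
    ("group_a", "id_2", "id_2"),
    ("group_a", "id_3", "id_3"),
    ("group_a", "id_4", "id_4"),
    ("group_b", "id_5", "id_6"),  # confused with another person in group_b
    ("group_b", "id_6", "id_6"),
    ("group_b", "id_7", "id_6"),  # confused with another person in group_b
    ("group_b", "id_8", "id_8"),
]

scores = accuracy_by_group(records)
gap = max(scores.values()) - min(scores.values())

print(scores)  # {'group_a': 1.0, 'group_b': 0.5}
print(gap)     # 0.5
```

Here the overall accuracy is 75%, which looks acceptable until the breakdown shows that every error falls on the under-represented group.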

What are the Implications of Biased AI?

Biases can lead to severe repercussions, especially when they contribute to social injustice or discrimination. This is because biased data can reinforce and worsen existing prejudices, resulting in systemic inequalities.

As a consequence, biased AI can stop people from fully taking part in the economy and society. As a matter of good business practice and ethics, businesses should not use systems that produce inaccurate results and foster mistrust among people of colour, women, people with disabilities, or other marginalised groups.

Conclusion

In conclusion, examining AI bias reveals a complex and multifaceted issue with far-reaching implications. From the reinforcement of harmful stereotypes to the perpetuation of social inequalities, bias in AI systems poses significant challenges to achieving fairness, transparency, and accountability.

Nevertheless, by understanding the different types of bias and their impact, we can take proactive steps to mitigate its effects and foster the development of more equitable AI technologies.